Ethical Considerations for AI Algorithms in Finance

The growing adoption of artificial intelligence (AI) algorithms in the financial industry has sparked intense debate among regulators, policymakers, and practitioners about their impact on the sector. AI algorithms can bring significant benefits, such as greater efficiency, lower costs, and better risk management, but they also raise several ethical considerations that need to be addressed.

1. Data Bias and Equity

One of the most important issues with AI algorithms in finance is data bias and equity. An algorithm trained on biased data can perpetuate existing social inequalities and disadvantage certain groups. For example, if a loan application algorithm is trained on historical data that favors white-collar workers over applicants from marginalized communities, it may reproduce that preference and lead to unfair lending practices.

To mitigate this problem, financial firms must be transparent about the data used to train their AI algorithms and disclose any known or potential biases. They should also implement robust testing procedures that check both the accuracy of their algorithms and how outcomes differ across groups, and make adjustments as needed.
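One way such a testing procedure might look is a comparison of approval rates across applicant groups. The sketch below is only an illustration: the group labels, the sample decisions, and the 0.8 cutoff are assumptions, not values taken from any firm or regulation discussed here. The cutoff borrows the "four-fifths" rule of thumb from US employment selection guidelines, and applying it to lending is itself an assumption.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is a boolean. The group labels are hypothetical.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group was approved, so the rates do not differ
    return min(rates.values()) / highest


# Hypothetical loan decisions, for illustration only.
sample = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = approval_rates(sample)
ratio = disparate_impact_ratio(rates)

# Assumed cutoff: flag the model for review when the ratio is below 0.8.
needs_review = ratio < 0.8
```

A low ratio does not prove that the algorithm is unfair, and a high one does not prove that it is fair. It is a signal that the training data and the model deserve a closer look.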

2. Job Displacement and Economic Inequality

The growing use of AI algorithms in finance has also raised concerns about job displacement and economic inequality. As machines become more adept at performing routine tasks, there is a risk that human workers will lose their jobs, which in turn could contribute to unemployment and social unrest.

To address this problem, financial firms must invest in retraining programs for affected employees, provide support services to those who have lost their jobs, and foster entrepreneurship and innovation in underserved communities. They should also prioritize investments in AI education and training to develop the skills needed to work with machines.

3. Cybersecurity Risks

AI algorithms are vulnerable to cybersecurity risks that could compromise sensitive financial data and disrupt market operations. To mitigate this risk, financial institutions must invest in robust cybersecurity measures such as encryption, access control, and incident response plans.

They should also prioritize developing AI algorithms that are secure by design, using techniques such as homomorphic encryption and differential privacy to protect user data. In addition, they should collaborate with industry peers and regulators to share best practices for securing AI systems.
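To make differential privacy more concrete, here is a minimal sketch of the Laplace mechanism applied to a counting query. It assumes a simple setting in which an institution wants to publish how many accounts meet some condition without revealing whether any single customer is in the data. The function names, the sample balances, and the choice of epsilon are all hypothetical, and a production system would rely on a vetted privacy library rather than code like this.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential variables with
    mean `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of the items in `values` matching `predicate`.

    Adding or removing one record changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for value in values if predicate(value))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical account balances, for illustration only.
balances = [100, 2500, 40, 9000, 15]
noisy = private_count(balances, lambda b: b > 1000, epsilon=1.0)
```

A smaller epsilon adds more noise and gives stronger privacy at the cost of accuracy, so choosing it is a policy decision as much as a technical one.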

4. Transparency and Accountability

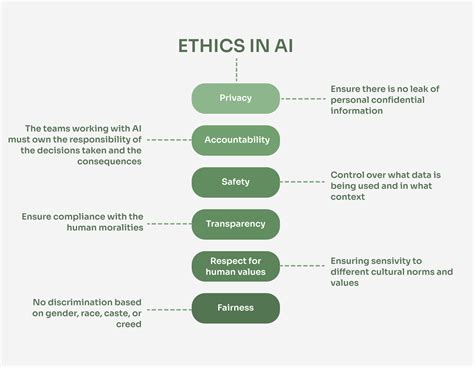

The growing reliance on AI algorithms in finance has raised concerns about transparency and accountability. As machines make decisions that affect financial outcomes, it is essential that users understand the rationale behind those decisions.

To address this issue, financial institutions must prioritize transparency and explainability of their AI algorithms through clear documentation, visualizations, and audit trails. They should also develop guidelines for responsible development and deployment of AI, ensuring that users can make informed decisions about their financial lives.
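As one illustration of what an audit trail could involve, the sketch below chains each logged decision to the previous one with a SHA-256 hash, so that editing an earlier record breaks the chain. The record fields (`applicant_id`, `decision`, `reason`) are hypothetical, and this is a simplified assumption about how such a log might work rather than a description of any particular institution's system.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash used before the first entry


def _digest(prev_hash, record):
    """Hash the previous entry's hash together with this record."""
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_record(log, record):
    """Append a decision record that is linked to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({
        "record": record,
        "prev_hash": prev_hash,
        "hash": _digest(prev_hash, record),
    })


def verify(log):
    """Return True if every entry still matches its stored hash chain."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical decision records, for illustration only.
log = []
append_record(log, {"applicant_id": 1, "decision": "approved",
                    "reason": "income above threshold"})
append_record(log, {"applicant_id": 2, "decision": "declined",
                    "reason": "insufficient credit history"})
chain_ok = verify(log)
```

A chain like this only makes tampering detectable if the most recent hash is also kept somewhere the log's editors cannot change. Recording a plain-language reason with each decision is what ties the audit trail back to explainability.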

5. Regulatory Frameworks

The lack of a comprehensive regulatory framework for AI in finance poses significant risks to the sector. Existing regulations need to be adapted or expanded to address emerging challenges and concerns.

Regulators should invest in research to develop new standards, guidelines, and best practices for AI in finance. They should also engage with industry stakeholders to foster dialogue and collaboration on regulatory issues.

Conclusion

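The use of AI algorithms in finance is a complex issue that requires careful consideration of several ethical factors: data bias and equity, job displacement and economic inequality, cybersecurity, transparency and accountability, and regulation.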